Propósitos y Representaciones

Print version ISSN 2307-7999; online version ISSN 2310-4635

Propós. represent. vol.11 no.3 Lima Sept./Dec. 2023 Epub Dec 31, 2023

http://dx.doi.org/10.20511/pyr2023.v11n3.1868

RESEARCH ARTICLES

Psychometric Properties of Research Competency Scales: A Systematic Review

1Universidad Peruana Unión, Lima, Perú

2Universidad Nacional Federico Villarreal, Lima, Perú

3Universidad Nacional Mayor de San Marcos, Lima, Perú

4Universidad Nacional de San Martín, Tarapoto, Perú

5Escuela de Educación Superior Pedagógico Público, Tarapoto, Perú

The study aimed to explore the psychometric properties of research competency scales published in the scientific literature in seven databases (Scopus, Web of Science, ERIC, Embase, EBSCO, PubMed and SciELO) between 2014 and June 2023. Thirteen papers were systematized, in which 11 scales were found; their content validity was measured using indices such as the S-CVI, Aiken's V and item-total correlations. Similarly, the results of the exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) showed adequate fit indices such as CFI, GFI, TLI, SRMR and RMSEA, supporting the factor structure. Reliability was mostly evaluated through Cronbach's alpha coefficient, yielding high and consistent values. In addition, coefficients such as McDonald's Omega, hierarchical Omega, ordinal alpha, Guttman and Spearman-Brown were used, all with values above .70, indicating internal consistency of the measurements. In summary, instruments such as the RCS, SRCS, RPCS, SPRDS, EAHIF, AHABI, ERL, self-perception questionnaires and rubrics have proven to be effective tools for assessing and developing research skills. These scales can therefore improve the assessment and development of research skills in university students, and future research is expected to use them to evaluate pedagogical approaches and international contexts.

Keywords: Research skills; Validity; Reliability; Psychometrics; Systematic review

Introduction

In higher education and academic training, research competencies have become key; they are understood as the set of skills, knowledge and attitudes that enable an individual to carry out research effectively and rigorously (Nolazco-Labajos et al., 2022; Torres & Manchego, 2023; Valderrama et al., 2022). These competencies involve the ability to formulate research questions, collect and analyze data, evaluate sources, develop evidence-based arguments, and disseminate results clearly and coherently (Jeréz et al., 2022). They not only equip students with the tools needed to approach scientific research effectively, but also influence their ability to analyze information critically, synthesize knowledge and make informed decisions (Castellanos & Rios-González, 2017; Tuononen & Parpala, 2021; Vieno et al., 2022). Measuring these competencies has become an essential issue in today's education, prompting the creation of specific scales for their evaluation.

Several studies have systematized instruments for measuring research competencies and found adequate psychometric properties for students, teachers and professionals (Castro-Rodríguez, 2021; Chen et al., 2021). However, these have been presented mainly in medical higher education, with applications in evidence-based practice and medical education programs (Charumbira et al., 2021; Ianni et al., 2021). Although studies on attitudes towards research have been reported (Hernández et al., 2021; Rodríguez et al., 2023), there is little or no evidence from studies that psychometrically describe research competency instruments, despite the increasing measurement of these competencies in higher education in recent years (da Silva et al., 2023; Ipanaqué-Zapata et al., 2023; Kaur et al., 2023; Smith et al., 2020).

This study proposes a systematic review of the psychometric properties of the scales used to assess research competencies, because the effectiveness of such scales depends largely on their validity (content, construct and criterion) and reliability (Echevarría-Guanilo et al., 2019; Zangaro, 2019). These properties indicate how accurately a scale measures the skills required for research; researchers must therefore be aware of the limitations of the instruments they apply (McKechnie & Fisher, 2022) and of the importance of these properties at the time of measurement, to ensure that the best tool is chosen for the research question and the target population (Alavi et al., 2022).

Thus, the review not only contributes to a deeper understanding of the tools available to assess research competencies, but also allows the identification of areas for improvement and possible gaps in the current literature. In addition, the results have a direct impact on higher education practice, providing valuable information for teachers, researchers and professionals interested in training and evaluating research competencies in university students and in the generation of knowledge. Through an exhaustive analysis of the existing literature, the objective was to explore the psychometric properties of research competency scales, providing a comprehensive view of their validity, consistency and ability to predict research performance in education or other disciplines. The specific objectives were to describe the studies found in the scientific literature, the main measurement instruments, the most relevant competencies, the characteristics and types of validity and reliability, and the main limitations.

Therefore, following the PICO methodology, research questions were formulated, since specifying these aspects is an important step in a systematic review (García-Peñalvo, 2022). The first question was which instruments have been used in psychometric studies of research competencies, since identifying them is essential to obtain results appropriate to the context in question. The second was what characterizes their validity and reliability, in order to guarantee their applicability and replicability in higher education, professional or other settings. The third asked which types of validity and reliability were applied and what limitations were found in the studies analyzed, which made it possible to elucidate the progress of research in recent years. Finally, the fourth asked which research competencies or components have been studied, based on the factors of the instruments, in order to obtain a grouping for future studies.

Method

Design

This was a theoretical study (García-González & Sánchez-Sánchez, 2020) with a systematic review design (Ranganathan & Aggarwal, 2020). The PRISMA guidelines (Page et al., 2021) were followed to synthesize the information found in the bibliographic sources.

Bibliographic Review

Studies published between 2014 and June 2023 were selected from seven databases (Scopus, Web of Science, ERIC, Embase, EBSCO, PubMed and SciELO). The search terms were "propiedades psicométricas, psychometric properties, psychometric characteristics, validity, reliability, factor structure, competencias investigativas, investigative skills, research competencies, research skills, investigative competencies". The search was limited to empirical studies available in full text; the reference lists of the full-text articles and the "similar studies" suggested by the databases were also reviewed.

Procedure

Search procedure.

The terms and descriptors, combined with the Boolean operators AND and OR, were entered into the search engines of the databases, resulting in the search equations shown in Table 1.

Selection criteria and processes.

The inclusion criteria were: being a quantitative study published in one of the selected scientific databases between 2014 and June 2023; having a population and/or sample of participants with at least higher education (undergraduate or graduate students, teachers, or professionals of any field); having evaluated at least one psychometric property; and focusing on the evaluation of research competencies and/or skills, which excluded scales of "attitude" towards research. Only studies available in full text were considered, and no restrictions were applied regarding the language of the documents or the number of dimensions of the instruments.

Table 1 Database search process

| Database | Search equation |
|---|---|
| Scopus | [TITLE-ABS-KEY ("Psychometric properties" OR "Psychometric characteristics" OR validity OR reliability OR "Factor structure" AND "investigative powers" OR "investigative skills" OR "research competencies" OR "research skills" OR "investigative competencies")] |
| Web of Science | [TI=(Psychometric properties AND investigative skills OR research competencies)] |
| ERIC | [TI Psychometric properties OR TI Psychometric characteristics AND TI "investigative powers" OR TI "investigative skills" OR TI "research competencies" OR TI "competencias de investigación"] |
| Embase | [('psychometric properties'/exp OR 'psychometric properties' OR 'psychometric characteristics') AND 'investigative skills' OR 'research competencies' OR 'research skills'] |
| EBSCO | [TI (psychometric properties or validity or reliability) AND TI investigative skills OR TI research competencies OR TI research skills for students OR TI research skills] |
| PubMed | [((psychometrics[Title/Abstract]) AND (investigative skills[Title/Abstract])) OR (research skills[Title/Abstract])] |
| SciELO | [(ti:(competencias de investigación AND propiedades psicométricas))] |

Source: Prepared by the authors.

For the selection of the instruments, the theoretical foundations supporting the structure of each one were reviewed, and it was verified that each study met at least the criteria of a quantitative study. In addition, three of the researchers (CTM, NPPT and SLPT) carried out the screening to check the coherence of document inclusion, thus minimizing bias due to the social desirability of any single author and ensuring quality in the systematization process, following the recommendation of the PRISMA checklist (Page et al., 2021).

Coding of articles.

To classify the documents found, documentary analysis (Bracho et al., 2021) was applied using a data collection matrix designed in Microsoft Excel®, which recorded the authors, country, objective, sample, field of study, instruments, number of items, dimensions and/or factors, main results, conclusions and limitations of each study. The data entered were sent to two external researchers to verify their accuracy. Two scales measuring attitudes toward research were discarded, because attitude is a predictor of research competency development rather than a measure of the competency itself.

Data analysis

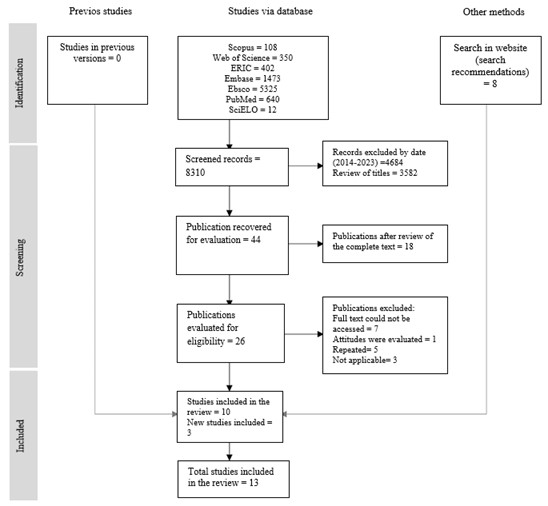

Figure 1 summarizes the search process followed to build the data collection matrix. The databases initially returned 8,318 articles; after the time-frame and exclusion criteria were applied, 26 documents remained. Duplicates, articles not available in full text and articles that did not focus on the study variables were then removed, leaving 13 documents for systematization.

Results

Table 2 shows the main characteristics of the selected articles. Nine articles mainly evaluated the psychometric properties of research competency scales, while the other four focused on designing and validating such scales. Six of the 13 studies were conducted in South America, four of them in Peru; the scales were evaluated with 6,922 students in health sciences, engineering, business, communication sciences and graduate studies. Most participants were women; in addition, three studies evaluated the instruments with teachers and one with professionals.

Table 2 Characteristics of systematized articles

| Authors / Country | Sample | Field of study | Instrument |
|---|---|---|---|
| Qiu et al. (2019) / China | 146 undergraduate students (88.36% female, 11.64% male) | Nursing | Research Competency Scale (RCS-N); 24 items, one-dimensional |
| Cobos et al. (2016) / Ecuador | 150 students (75.3% male, 24.7% female) | Engineering | Self-assessed skills for inquiry-based learning (AHABI); 20 items, three factors and/or dimensions |
| Ipanaqué-Zapata et al. (2023) / Peru | 1598 students (70.53% female, 29.47% male) | Not specified | Self-perception of research skills instrument; 8 items, one-dimensional |
| Merino-Soto et al. (2022) / Peru | 307 students (72.3% female, 27.7% male) | Psychology | Research Perceived Competency Scale (RPCS); 4 items, one-dimensional |
| Duru & Örsal (2021) / Turkey | 937 professionals (85.7% women, 14.3% men) | Nursing | Scientific Research Competency Scale (SRCS); 57 items, four factors and/or dimensions |
| Böttcher-Oschmann et al. (2019) / Germany | 536 students | Graduate studies | Fragebogen zur Erfassung studentischer Forschungskompetenzen (F-Komp); 32 items, four factors and/or dimensions |
| Swank & Lambie (2016) / United States | 379 participants (64% women, 35% men, 1% other) | Graduate studies | Research Competencies Scale (RCS); 54 items, six factors and/or dimensions |
| Rockinson-Szapkiw (2018) / United States | 433 students | Graduate studies | Scholar-Practitioner Research Development Scale (SPRDS); 24 items, five factors and/or dimensions |
| Cota & Beltran-Sanchez (2021) / Mexico | 124 teachers (54.8% female, 45.2% male) | Not specified | Research Competencies Scale (RCS); 46 items, four factors and/or dimensions |
| Guerrero-Narbajo et al. (2023) / Peru | 1260 students (56.98% female, 43.02% male) | Engineering, Social Sciences and Nursing | Self-Assessment Scale for Formative Research Skills (EAHIF); 17 items, three factors and/or dimensions |
| Aliaga-Pacora et al. (2021) / Peru | 38 thesis advisors | Graduate studies | Socioformative rubric to evaluate research competencies in graduate studies; 11 items, five factors and/or dimensions |
| Groß et al. (2017) / Germany | 2113 students | Communication sciences | Educational Research Literacy (ERL); 22 items, four factors and/or dimensions |
| Hernández et al. (2021) / Colombia | 32 teachers (56.3% female, 43.7% male) | Not specified | Scale to evaluate research competencies; 31 items, three factors and/or dimensions |

Source: Prepared by the authors.

Instruments used

Three of the 13 studies applied the Research Competence Scale (RCS) (Cota & Beltran-Sanchez, 2021; Duru & Örsal, 2021; Swank & Lambie, 2016), with one-dimensional, six-factor and four-factor structures. Shorter scales were also found: the four-item Research Perceived Competence Scale (RPCS) (Merino-Soto et al., 2022) and the eight-item Research Skills Self-Perception Instrument (Ipanaqué-Zapata et al., 2023). The most extensive instruments comprised 46, 54 and 57 items, and 11 instruments were applied at both the undergraduate and graduate levels.

Validity characteristics of the instruments

Content validity.

According to Table 3, seven of the 13 studies reported content validity evidence. Qiu et al. (2019) used the scale-level content validity index (S-CVI), with values above .95, as well as inter-item correlations ranging from .80 to 1. Likewise, Merino-Soto et al. (2022) found high covariance between items, with values between .81 and .89, while Duru & Örsal (2021) found significant corrected item-total correlations between .61 and .83. In the remaining studies, content validity was established through expert judgment: agreement among judges, measured with Aiken's V, Fleiss' kappa and Kendall's W, exceeded .80 except for Kendall's W, which was nonetheless adequate in its three dimensions [.66; .64; .69] (Aliaga-Pacora et al., 2021; Cota & Beltran-Sanchez, 2021; Guerrero-Narbajo et al., 2023; Hernández et al., 2021).
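The expert-judgment indices mentioned above reduce to simple proportions of ratings. The following Python sketch computes the item-level CVI (I-CVI), the scale-level CVI in its averaging (S-CVI/Ave) and universal-agreement (S-CVI/UA) variants, and Aiken's V. It assumes a 4-point relevance scale (1 = not relevant, 4 = highly relevant) with ratings of 3 or 4 counted as "relevant"; the rating matrix is hypothetical and is not taken from any of the reviewed studies, which may have used other scales.

```python
from statistics import mean


def item_cvi(ratings, relevant_min=3):
    """I-CVI: proportion of experts who rated the item as relevant (>= relevant_min)."""
    return sum(r >= relevant_min for r in ratings) / len(ratings)


def scale_cvi_ave(matrix):
    """S-CVI/Ave: mean of the item-level CVIs (rows = items, columns = experts)."""
    return mean(item_cvi(row) for row in matrix)


def scale_cvi_ua(matrix):
    """S-CVI/UA: proportion of items rated relevant by every expert."""
    return sum(item_cvi(row) == 1.0 for row in matrix) / len(matrix)


def aiken_v(ratings, low=1, high=4):
    """Aiken's V = sum(r - low) / (n * (high - low)); ranges from 0 to 1."""
    return sum(r - low for r in ratings) / (len(ratings) * (high - low))


# Hypothetical ratings: 3 items (rows) judged by 5 experts (columns) on a 1-4 scale.
ratings = [
    [4, 4, 3, 4, 4],
    [4, 3, 4, 4, 2],
    [3, 4, 4, 4, 4],
]

print(f"S-CVI/Ave = {scale_cvi_ave(ratings):.3f}")  # 0.933
print(f"S-CVI/UA  = {scale_cvi_ua(ratings):.3f}")  # 0.667
for i, row in enumerate(ratings, start=1):
    print(f"Item {i}: I-CVI = {item_cvi(row):.2f}, Aiken's V = {aiken_v(row):.3f}")
```

The second item, which one expert rated 2, lowers both the universal-agreement S-CVI and its own Aiken's V (0.800), while the averaging S-CVI stays above .90; this is why the variant of S-CVI used should be reported alongside the value.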

Table 3 Psychometric evidence of the articles (n = 13)

| Authors | Content validity | Construct validity | Reliability |
|---|---|---|---|
| Qiu et al. (2019) | Content validity using the overall S-CVI of the RCS-N was .98. | The CFA showed a two-dimensional model with adequate fit indices: χ2 (df = 54) = 99.91 (p < .001), RMSEA = .08, CFI = .98, SRMR = .02, r2 = .98. | α = .98 global |
| Cobos et al. (2016) | Not specified | The KMO sampling adequacy index reached .891 and Bartlett's test of sphericity yielded 1429.971 (p < .001). Three factors explained 54.85% of the total variance. | α = .91 for the full scale; F1 = .89; F2 = .71; F3 = .69 |
| Ipanaqué-Zapata et al. (2023) | Not specified | The EFA showed adequate factor loadings for the unifactorial model (λ ≥ .49). The CFA showed adequate fit indices (χ2 = 404.35; CFI = .99; TLI = .981; SRMR = .04). The instrument is invariant across gender and age. Normative data: low (8-17 points), medium (18-22 points) and high (23-24 points). | α = .92 global; ordinal α = .96 and Ω = .90 |
| Merino-Soto et al. (2022) | Covariation among the items was high, ranging from .81 to .89, indicating approximately 71.6% common variance. | The fit of the one-dimensional RPCS model was satisfactory: WLSMV χ2 (2) = 31.28, p < .01, CFI = .99, SRMR = .02. Regarding convergent validity, the RPCS score was associated with satisfaction with studying and general anxiety symptoms. | Ω = .96; α = .96 global |
| Duru & Örsal (2021) | Corrected item-total correlation coefficients ranged from .61 to .83, and all items were statistically significant. | The EFA grouped the 57 items into four subdimensions, with factor loadings between .62 and .79, explaining 69.87% of the total variance. The SRCS was negatively related to the ASTSR subdimensions "reluctance to help researchers" (r = -.33) and "negative attitude toward research" (r = -.34), and to ASTR scores (r = -.54). It was positively related (p < .001) to the ASTSR subdimensions "positive attitude toward research" (r = .64) and "positive attitude toward researchers" (r = .47). | SRCS global [α = .99, Guttman = .94, SB = .94]; F1 [α = .98, Guttman = .97, SB = .97]; F2 [α = .97, Guttman = .95, SB = .96]; F3 [α = .94, Guttman = .95, SB = .95]; F4 [α = .91, Guttman = .82, SB = .90] |
| Böttcher-Oschmann et al. (2019) | Not specified | The research competencies model showed adequate fit indices: χ2 (2267) = 3877.84, p < .001; χ2/df = 1.71; CFI = .90; RMSEA = .04; SRMR = .06. | α ≥ .80 |
| Swank & Lambie (2016) | Not specified | In the EFA, Bartlett's test of sphericity was significant (χ2 = 26,042.26, df = 1431, p < .001) and the KMO value was meritorious (.96); the RCS factors explained 76.86% of the variance. | α = .98 global; F1 = .98; F2 = .96; F3 = .95; F4 = .94; F5 = .92; F6 = .96 |
| Rockinson-Szapkiw (2018) | Not specified | The EFA yielded five factors, which together explained 78.5% of the total variance. | SPRDS α = .93; F1 = .90, F2 = .93, F3 = .88, F4 = .82, F5 = .85 |
| Cota & Beltran-Sanchez (2021) | Content validity was assessed by five expert judges; inter-judge agreement, calculated with Fleiss' kappa, was satisfactory (k = .08; p = .04). | In the EFA, the four subscales showed communalities greater than .30, factor loadings greater than .50, KMO values greater than .80 and explained variance greater than 50% [F1: KMO = .90, 75% variance; F2: KMO = .80, 53% variance; F3: KMO = .91, 81% variance; F4: KMO = .89, 82% variance]. | α for subscales: F1 = .97; F2 = .88; F3 = .96; F4 = .95 |
| Guerrero-Narbajo et al. (2023) | Content validity through expert judgment, with Aiken's V greater than .80 for the items. | The KMO value for the EFA was .99; three factors explained 47% of the variance. The CFA showed adequate fit indices [χ2/df = 1.24; GFI = .99; RMSEA = .02; SRMR = .04; CFI = .99; TLI = .99]. | α = .90, Ω = .90 global; F1 = .81, .81; F2 = .81, .81; F3 = .72, .72 |
| Aliaga-Pacora et al. (2021) | Aiken's V > .80; 95% CI VI > .75 for item relevance and wording. | Not specified | α = .83 global |
| Groß et al. (2017) | Not specified | Factor analysis showed that the bifactor model was the most appropriate. | Ω = .92 and Ωh = .87 global |
| Hernández et al. (2021) | Kendall's W concordance analysis in all three dimensions [.66; .64; .69] | Not specified | α = .84 global; F1 = .89; F2 = .91; F3 = .89 |

Source: Prepared by the authors.
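Kendall's W, used by Hernández et al. (2021) to assess concordance among judges (Table 3), compares the rank sums that each item receives across judges. The Python sketch below implements the standard formula W = 12S / (m²(n³ − n) − mΣT), where m is the number of judges, n the number of items, S the sum of squared deviations of the rank sums from their mean, and ΣT the tie correction. The judge scores are hypothetical, and the exact procedure used in the reviewed study (for example, how items were grouped by dimension) is not described in the source and is an assumption here.

```python
def average_ranks(scores):
    """Rank scores from 1..n, giving tied values their average rank.

    Returns the ranks and the tie term sum(t**3 - t) used in the tie correction.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    tie_term = 0
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value equals the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        avg_rank = (start + end) / 2 + 1  # positions are 0-based, ranks are 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        t = end - start + 1
        tie_term += t ** 3 - t
        start = end + 1
    return ranks, tie_term


def kendalls_w(matrix):
    """Kendall's W with tie correction; rows = judges, columns = items judged."""
    m, n = len(matrix), len(matrix[0])
    rank_rows, ties = [], 0
    for scores in matrix:
        ranks, tie_term = average_ranks(scores)
        rank_rows.append(ranks)
        ties += tie_term
    rank_sums = [sum(row[c] for row in rank_rows) for c in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((r - mean_sum) ** 2 for r in rank_sums)
    denominator = m ** 2 * (n ** 3 - n) - m * ties
    if denominator == 0:
        raise ValueError("W is undefined when every judge ties all items")
    return 12 * s / denominator


# Hypothetical scores from 3 judges (rows) for 4 items (columns).
judges = [
    [4, 3, 2, 1],
    [4, 3, 1, 2],
    [3, 4, 2, 1],
]
print(round(kendalls_w(judges), 3))  # 0.822
```

W ranges from 0 (no agreement) to 1 (identical rankings), so the values of .64 to .69 reported in Table 3 indicate moderate rather than strong concordance.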

Construct validity.

According to Table 3, 11 of the 13 articles used factor analysis to provide evidence of the construct validity of the scales. Seven of these performed an EFA, with KMO values > .80 and variance explained by the factors between 47% and 76.86% (Cobos et al., 2016; Cota & Beltran-Sanchez, 2021; Duru & Örsal, 2021; Guerrero-Narbajo et al., 2023; Ipanaqué-Zapata et al., 2023; Rockinson-Szapkiw, 2018; Swank & Lambie, 2016). In the CFA, the Research Competency Scale (RCS-N), the Research Perceived Competency Scale (RPCS), the Self-Perceived Research Skills Instrument, the Questionnaire for Assessing Students' Research Competencies and the Educational Research Literacy Scale (ERL) showed adequate fit to the proposed models, with CFI ≥ .90, GFI ≥ .95, TLI ≥ .95, SRMR < .060 and RMSEA < .05, supporting their factor structure (Böttcher-Oschmann et al., 2019; Groß et al., 2017; Guerrero-Narbajo et al., 2023; Ipanaqué-Zapata et al., 2023; Merino-Soto et al., 2022; Qiu et al., 2019). Only the self-perceived research skills instrument was tested for measurement invariance across sex and age; it also provided normative data based on its raw score: low [8-17], medium [18-22] and high [23-24]. Moreover, the RPCS and SRCS studies examined validity in relation to other variables, providing additional evidence for these scales.
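The KMO index and Bartlett's test of sphericity, reported before most of the EFAs above, can both be computed from the item correlation matrix. The NumPy sketch below uses the usual definitions: KMO compares squared correlations with squared partial correlations, and Bartlett's statistic is −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom. For the 54-item RCS this gives 54·53/2 = 1431 degrees of freedom, matching the value reported for Swank & Lambie (2016). The data are simulated from a hypothetical one-factor model with loadings of 0.8, not taken from any reviewed study.

```python
import numpy as np


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy.

    data: array of shape (respondents, items).
    """
    r = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(r)
    # Partial correlation of each item pair, controlling for all other items.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(r.shape[0], dtype=bool)
    r2 = np.sum(r[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)


def bartlett_sphericity(data):
    """Bartlett's test statistic and degrees of freedom (H0: R is an identity matrix)."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)
    _, log_det = np.linalg.slogdet(r)
    statistic = -(n - 1 - (2 * p + 5) / 6) * log_det
    return statistic, p * (p - 1) // 2


# Simulated one-factor data: 500 respondents, 6 items with loadings of 0.8.
rng = np.random.default_rng(42)
factor = rng.normal(size=(500, 1))
items = 0.8 * factor + 0.6 * rng.normal(size=(500, 6))

print(f"KMO = {kmo(items):.2f}")
stat, df = bartlett_sphericity(items)
print(f"Bartlett chi2 = {stat:.1f}, df = {df}")
```

The p-value of Bartlett's test can then be obtained from the chi-square survival function, for example `scipy.stats.chi2.sf(stat, df)` if SciPy is available. On purely random items the KMO falls to around .50, which is why values above .80 are read as evidence that the items share enough common variance to be factored.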

Reliability.

Table 3 shows that Cronbach's alpha coefficient was used for 100% of the instruments measuring research competencies and/or skills, both at the global level and for the dimensions or factors. Coefficients ranged from .83 to .99 at the global level and from .69 to .98 for the dimensions. In addition, McDonald's Omega (Ω), hierarchical Omega (Ωh), ordinal alpha, Guttman and Spearman-Brown (SB) coefficients were used, all reaching adequate values that meet internal consistency criteria.
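Cronbach's alpha and the split-half coefficients reported in Table 3 (Spearman-Brown and Guttman) can all be computed from a respondents × items score matrix. The NumPy sketch below assumes an odd-even split of the items, which is only one of several possible splits; the reviewed studies do not state which split they used. The responses are simulated, not taken from the reviewed studies.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a (respondents x items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - sum_item_var / total_var)


def split_half(items):
    """Odd-even split-half reliability.

    Returns the Spearman-Brown corrected correlation between the two halves and
    Guttman's split-half coefficient, which does not assume equal half variances.
    """
    items = np.asarray(items, dtype=float)
    half_a = items[:, 0::2].sum(axis=1)
    half_b = items[:, 1::2].sum(axis=1)
    r = np.corrcoef(half_a, half_b)[0, 1]
    spearman_brown = 2 * r / (1 + r)
    total_var = (half_a + half_b).var(ddof=1)
    guttman = 2 * (1 - (half_a.var(ddof=1) + half_b.var(ddof=1)) / total_var)
    return spearman_brown, guttman


# Simulated responses: 500 respondents, 6 items driven by one common factor.
rng = np.random.default_rng(7)
factor = rng.normal(size=(500, 1))
scores = 0.8 * factor + 0.6 * rng.normal(size=(500, 6))

print(f"alpha = {cronbach_alpha(scores):.2f}")
sb, g = split_half(scores)
print(f"Spearman-Brown = {sb:.2f}, Guttman = {g:.2f}")
```

When the two halves have equal variances the Spearman-Brown and Guttman coefficients coincide; they diverge when the halves differ in length or variance, which may explain why Duru & Örsal (2021) report slightly different values for the two coefficients in some dimensions.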

According to Table 4, the RCS instruments evaluated by Cota & Beltran-Sanchez (2021), Qiu et al. (2019) and Swank & Lambie (2016) proved to be tools with adequate psychometric properties. However, they cannot replace written or oral exams for assessing students' competencies; rather, they help identify research strengths and recognize areas to focus on in order to further develop research competencies at both the undergraduate and graduate levels. Similarly, the SRCS, RPCS, SPRDS, EAHIF, AHABI, ERL, the self-perception of research skills instrument, the questionnaire for recording students' research skills, the socioformative rubric for evaluating research skills in graduate studies and the scale for evaluating research skills have adequate levels of validity and reliability; however, more rigorous studies are required to confirm their constructs and invariance.

Table 4 Main conclusions and limitations encountered (n = 13)

| Authors | Conclusions | Limitations |
|---|---|---|
| Qiu et al. (2019) | The RCS-N is a promising, valid and reliable tool for assessing the research competence of nursing students. | Small sample size. |
| Cobos et al. (2016) | A valid instrument has been generated to measure the learning of research skills. | Not specified |
| Ipanaqué-Zapata et al. (2023) | The research skills scale is valid and reliable for Peruvian university students of both sexes and all age groups in an e-learning environment. | The RMSEA value was > .08; the instrument assessed students' self-perceptions of their research skills; non-probability convenience sampling. |
| Merino-Soto et al. (2022) | The instrument has adequate psychometric properties, with excellent fit indices evaluated by non-parametric and parametric methods; moreover, its brevity and the satisfactory validity evidence indicate that this new adaptation can contribute significantly to the teaching of research. | Participant sampling did not ensure that participants were representative of the population. |
| Duru & Örsal (2021) | The SRCS is a valid and reliable self-assessment tool that can be used to determine the scientific research competencies of nurses with undergraduate or graduate education. | Convenience sampling; absence of a parallel form, since the validity and reliability study was conducted only in the language in which the scale was prepared; length of the scale. |
| Böttcher-Oschmann et al. (2019) | The F-Komp is an instrument with which self-assessed research skills can be recorded, as distinct from learning skills. | Sample size and selection. |
| Swank & Lambie (2016) | The findings indicate that the RCS is a promising instrument for counselor educators and doctoral students to use in self-assessment, and it may also prove useful for educational and evaluation purposes. | Participants were not adequately distributed. |
| Rockinson-Szapkiw (2018) | There is evidence that the SPRDS is a valid and reliable instrument for assessing research competencies in doctoral students. | Limited number of programs and universities; homogeneity of the sample (students were pursuing degrees in education). |
| Cota & Beltran-Sanchez (2021) | The instrument has been shown to have empirical support. | Not specified |
| Guerrero-Narbajo et al. (2023) | The EAHIF presents evidence of content and construct validity and adequate reliability. | The instrument was applied only to students; the sample was not representative. |
| Aliaga-Pacora et al. (2021) | The research competencies instrument for graduate students has adequate levels of validity and reliability. | No construct validity analysis; sample size. |
| Groß et al. (2017) | The four-dimensional bifactor model was the most appropriate: the ERL appears to consist of one dominant factor and three secondary factors. | Not specified |
| Hernández et al. (2021) | The instrument has an adequate level of validity. | Not specified |

Source: Prepared by the authors.

Among the main findings, the limitations of the 13 articles reviewed centered mainly on sample size (five studies analyzed instruments with fewer than 200 participants) and selection method (100% non-probability convenience sampling). In addition, 30.7% of the documents did not characterize the study sample. Only six articles carried out a CFA, which limits the availability of screening tools validated across diverse contexts. Finally, 76.9% of the articles covered different undergraduate and graduate fields in evaluating research competencies.

After analyzing Tables 2 and 4, the main research competencies and/or skills were identified from the dimensions, indicators or elements of the instruments. They were grouped into five main components that can be applied to measure the variable: the competence factor itself, skills, knowledge of research methods and ethics, reporting and presentation of results, and research dissemination (Table 5).

Discussion

The aim of this study was to explore the psychometric properties of research competency scales, providing a comprehensive view of their validity, consistency and ability to predict research performance. A substantial share of the evaluation studies came from South America, in line with the various works published on the measurement of research competencies in recent years (Torres & Manchego, 2023; Valderrama et al., 2022), which reflects a significant need for, and interest in, such measurement in the scientific community. In this regard, research competencies are considered a very important construct for promoting critical thinking and evidence-based decision making (Castro-Rodríguez, 2023).

Table 5 Proposed factors for measuring research competencies.

| Factors and/or dimensions | Evaluation indicators |
|---|---|
| Competencies | Identification and organization of information; Literature review; Information literacy; Statistical competence; Evidence-based reasoning; Scientific generation of knowledge |
| Skills | Information processing and management; Writing skills; Preparation of scientific information; Methodological skills; Application of instruments; Reflection skills |
| Research methods and ethics | Qualitative research; Quantitative research; Sample selection methods; Knowledge of research ethics and integrity |
| Reporting and presentation of results | Introduction; Methodology; Results; Final report; Collaborative work |
| Research dissemination | Communication of results; Dissemination of results; Research value |

Source: Prepared by the authors.

The review found that the main scale for measuring research competencies and/or skills was the RCS, which had undergone the greatest number of psychometric analyses; however, it also presented diverse factor structures, which makes it difficult to define its dimensions precisely. This is consistent with Swank & Lambie (2016), who point out that dilemmas in internal structure make further psychometric analysis necessary. In addition, short questionnaires with adequate psychometric properties are available as alternatives (Aliaga-Pacora et al., 2021; Ipanaqué-Zapata et al., 2023; Merino-Soto et al., 2022).

As for content validity, several methods were used to confirm that the proposed items measure the variables, such as the CVI, item-total correlations, Aiken's V, Fleiss' kappa and Kendall's W. This agrees with Almanasreh et al. (2019), who state that content validity is an essential element in the psychometric evaluation of instruments; for competency scales to be optimal, rigorous evaluation is therefore necessary. Because standardized instruments are required for confidence in the accuracy of the results, Madadizadeh & Bahariniya (2023) argue that robust methods such as the content validity ratio (CVR) and the CVI are needed, given their relevance for judging how appropriate an item is to the overall scale. Failures in applying or measuring content validity have been linked to the scarcity of procedural guidance in the literature (Newman et al., 2013). Nevertheless, it is this process that supports the decision to retain an item from a qualitative and mixed perspective, rather than relying solely on the result of a statistical calculation.
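Two of the agreement indices named in this paragraph can be sketched in a few lines of Python. Lawshe's content validity ratio is CVR = (nₑ − N/2)/(N/2), where nₑ is the number of experts rating an item "essential" out of N experts. Fleiss' kappa corrects the observed agreement among several raters for the agreement expected from the marginal category proportions. The counts below are hypothetical and do not reproduce any reviewed study.

```python
def lawshe_cvr(n_essential, n_experts):
    """Lawshe's content validity ratio: (n_e - N/2) / (N/2), ranging from -1 to 1."""
    half = n_experts / 2
    return (n_essential - half) / half


def fleiss_kappa(counts):
    """Fleiss' kappa for a (subjects x categories) table of rating counts.

    Each row must sum to the same number of raters n.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every subject must be rated by the same number of raters")
    # Observed agreement for each subject, then averaged across subjects.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_subjects
    # Expected (chance) agreement from the marginal category proportions.
    n_total = n_subjects * n_raters
    p_j = [sum(row[j] for row in counts) / n_total for j in range(len(counts[0]))]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical: 9 of 10 experts rate an item as "essential".
print(lawshe_cvr(9, 10))  # 0.8

# Hypothetical: 4 items rated by 5 experts as essential / useful / not necessary.
counts = [[5, 0, 0], [4, 1, 0], [5, 0, 0], [3, 2, 0]]
print(f"Fleiss' kappa = {fleiss_kappa(counts):.3f}")
```

In this hypothetical table the observed agreement is .75, yet kappa is only about .02, because almost all ratings fall into the "essential" category and chance agreement is therefore very high. This is a known property of kappa-type indices and is one reason why raw agreement, kappa and ratio-based indices such as the CVR can tell different stories about the same panel of judges.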

Regarding construct validity, the reviewed documents used EFA, CFA, measurement invariance and correlations with other variables (discriminant and convergent validity), which allowed them to report adequate psychometric properties. However, many of them stopped at exploratory analyses. In education, instruments must undergo rigorous evaluation, so their quality needs to be tested with more robust methods or coefficients. CFA is therefore a fundamental step: as Escobedo et al. (2016) point out, CFA can correct or corroborate any shortcomings of the EFA and allows the specified hypotheses to be tested further. In addition, Buntins et al. (2021) note that instruments should show convergence with other measures before being used, since such evidence complements the widely used EFA and CFA, which Maric et al. (2023) identify as the most prominent methods for evaluating internal structure.
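The CFA fit indices reported by these studies can be derived from the chi-square statistics of the fitted model and of the baseline (independence) model. The sketch below uses the common textbook formulas for RMSEA, CFI and TLI; it is illustrative only, since software packages differ in details (for instance, some use N rather than N − 1 in RMSEA), and the chi-square values in the usage lines are invented. SRMR is omitted because it requires the observed and model-implied correlation matrices.

```python
import math


def rmsea(chi2, df, n):
    """Root mean square error of approximation, using N - 1 as many programs do."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative fit index of the target model (m) relative to the baseline model (b)."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)  # d_m >= 0, so d_b >= 0 as well
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker-Lewis index (non-normed fit index); it can fall outside [0, 1]."""
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


if __name__ == "__main__":
    # Invented values: model chi2 = 180 (df = 100), baseline chi2 = 2400 (df = 120), N = 300
    print(round(rmsea(180, 100, 300), 3))          # 0.052
    print(round(cfi(180, 100, 2400, 120), 3))      # 0.965
    print(round(tli(180, 100, 2400, 120), 3))      # 0.958
```

Because all three indices depend on the same chi-square statistics, a small sample or a non-probability sample (the limitation discussed below) affects them jointly rather than independently.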

Regarding reliability, all of the instruments (100%) were assessed with Cronbach's alpha. Although the reported values were adequate, above .70 (da Silva et al., 2015; Oviedo & Campo-Arias, 2005; Toro et al., 2022), its application did not consider the assumptions the coefficient requires. Zakariya (2022) states that before applying Cronbach's alpha it is necessary to verify that the instrument is unidimensional, since its indiscriminate use may underestimate or overestimate the reliability of the tests evaluated. Given this situation, more robust measures such as McDonald's omega should be used, as they provide better estimates of precision and replicability (Ventura-León & Peña-Calero, 2020; Xiao & Hau, 2022).
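The difference between the two coefficients is easier to see in their formulas. Alpha is computed directly from item and total-score variances, whereas omega (total, one-factor version) is computed from the loadings of a factor model that has already been fitted. The sketch below is a minimal illustration under those assumptions: the loadings passed to `mcdonald_omega` are hypothetical inputs rather than estimates, and a real analysis would first fit and check the one-factor model (or use ordinal omega for Likert items, as some reviewed studies did).

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha. `scores` has one row per respondent and one column per item."""
    k = len(scores[0])
    items = list(zip(*scores))                        # transpose rows into item columns
    item_var = sum(variance(col) for col in items)    # sum of sample item variances
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var / total_var)


def mcdonald_omega(loadings, error_variances=None):
    """Omega total for a one-factor model, given loadings from an already fitted model.

    If error variances are not supplied, the loadings are treated as standardized,
    so each error variance is 1 - loading**2.
    """
    if error_variances is None:
        error_variances = [1 - l ** 2 for l in loadings]
    common = sum(loadings) ** 2
    return common / (common + sum(error_variances))


if __name__ == "__main__":
    # Hypothetical data: 5 respondents x 3 items
    data = [[3, 4, 3], [4, 5, 4], [2, 3, 3], [5, 5, 4], [3, 3, 2]]
    print(round(cronbach_alpha(data), 3))                    # 0.923
    # Hypothetical standardized loadings from a one-factor model
    print(round(mcdonald_omega([0.8, 0.7, 0.6, 0.5]), 3))    # 0.749
```

When all loadings are equal the two coefficients estimate the same quantity; when loadings differ, alpha tends to understate reliability, which is one reason the literature cited above recommends omega.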

Taken together, the studies reported four main limitations. The first concerns sample size: the samples did not reach the representativeness expected for estimating or confirming the models, and they were drawn through non-probability or convenience sampling. This is consistent with Andrade (2021) and Jager et al. (2017), since the absence of a formal sample size calculation introduces bias and may yield samples that are not representative of the target population.

The search and systematization of documents for this review also had several limitations. First, it is very likely that not all scales measuring research competencies were included. To mitigate this risk, a broad set of databases was searched without restrictions on language or field of application, so that the search and selection process would be as complete as possible. Second, no comprehensive standardized appraisal criterion for including documents, such as the COSMIN checklist (Mokkink et al., 2010), was applied. Third, three relevant documents (Cater et al., 2016; Gess et al., 2019; Mallidou et al., 2018) could not be systematized because their full texts were not accessible, which reduced the number of documents analyzed. Finally, the properties of scales that measure attitudes towards research (Barrios & Ulises, 2020; Gros et al., 2022; Howard & Michael, 2019; Roberts & Povee, 2014) were not considered, even though such scales are needed to address diverse higher education contexts and to give a broader picture of research competencies, since attitude is a predictor of information seeking and learning.

Based on these results, future studies in higher education should continue to report psychometric properties. Validated scales can be implemented in academic programs to assess and develop research skills, which could help improve the quality of higher education and prepare students more effectively for research. In addition, the grouping into five factors (competencies, skills, research methods and ethics, reporting and presentation of results, and dissemination of research) could serve as a basis for designing a more comprehensive instrument applicable to specific settings.

Conclusions

Nine studies evaluated the psychometric properties of existing research competency scales or questionnaires, and four designed and validated new ones. Most of the studies come from South America, with 6922 participants. The most frequently studied scale was the RCS, with three studies. Content validity was established with the CVI, Aiken's V, Fleiss' kappa and Kendall's W. Construct validity was established mainly through EFA, together with adequate fit indices in the CFA (CFI ≥ .90, GFI ≥ .95, TLI ≥ .95, SRMR < .060 and RMSEA < .05). For internal consistency, Cronbach's alpha was used in most cases, together with McDonald's omega, ordinal omega, and the Guttman and Spearman-Brown coefficients. Overall, the scales and questionnaires identified are useful tools for measuring research competencies.
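The Spearman-Brown and Guttman coefficients mentioned above are both split-half estimates. As a minimal sketch, assuming an odd-even split of the items (other splits give different values), the Spearman-Brown formula steps up the correlation between the two half scores, while Guttman's split-half coefficient uses the variances of the halves and of the total. Function names and data are hypothetical.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_brown(r_half):
    """Step up a half-test correlation to full-test length: 2r / (1 + r)."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(scores):
    """Odd-even split; returns (Spearman-Brown coefficient, Guttman split-half coefficient)."""
    half_a = [sum(row[0::2]) for row in scores]   # items 1, 3, 5, ...
    half_b = [sum(row[1::2]) for row in scores]   # items 2, 4, 6, ...
    total = [a + b for a, b in zip(half_a, half_b)]
    r = pearson_r(half_a, half_b)
    guttman = 2 * (1 - (variance(half_a) + variance(half_b)) / variance(total))
    return spearman_brown(r), guttman


if __name__ == "__main__":
    # Hypothetical data: 5 respondents x 4 items
    rows = [[3, 4, 3, 4], [4, 5, 4, 4], [2, 3, 3, 2], [5, 5, 4, 5], [3, 3, 2, 3]]
    sb, g = split_half_reliability(rows)
    print(round(sb, 3), round(g, 3))
```

Spearman-Brown assumes the two halves are parallel (equal variances), whereas Guttman's coefficient does not, so the two diverge when the halves have unequal variability.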

Acknowledgments

The authors thank the researchers involved for their time and effort.

REFERENCES

Alavi, M., Hunt, G. E., Thapa, D. K., & Cleary, M. (2022). Conducting Systematic Reviews of the Quality and Psychometric Properties of Health-Related Measurement Instruments: Finding the Right Tool for the Job. Issues in Mental Health Nursing, 43(4), 317-322. doi: 10.1080/01612840.2021.1978599

Aliaga-Pacora, A. A., Juárez-Hernández, L. G., & Herrera-Meza, R. (2021). Diseño y validez de contenido de una rúbrica analítica socioformativa para evaluar competencias investigativas en posgrado. Apuntes Universitarios, 11(2), 62-82. doi: 10.17162/au.v11i2.632

Almanasreh, E., Moles, R., & Chen, T. F. (2019). Evaluation of methods used for estimating content validity. Research in Social and Administrative Pharmacy, 15(2), 214-221. doi: 10.1016/j.sapharm.2018.03.066

Andrade, C. (2021). The Inconvenient Truth About Convenience and Purposive Samples. Indian Journal of Psychological Medicine, 43(1), 86-88. doi: 10.1177/0253717620977000

Barrios, E., & Ulises, D. (2020). Diseño y validación del cuestionario “Actitud hacia la investigación en estudiantes universitarios”. Revista Innova Educación, 2(2), 280-302. doi: 10.35622/j.rie.2020.02.004

Böttcher-Oschmann, F., Groß, J., & Thiel, F. (2019). Validierung eines Fragenbogens zur Erfassung studentischer Forschungskompetenzen über Selbsteinschätzungen - Ein Instrument zur Evaluation forschungsorientierter Lehr-Lernarrangements. Unterrichtswissenschaft, 47(4), 495-521. doi: 10.1007/s42010-019-00053-8

Bracho, M. S., Fernández, M., & Díaz, J. (2021). Técnicas e instrumentos de recolección de información: Análisis y procesamiento realizado por el investigador cualitativo. Revista Científica UISRAEL, 8(1), 107-121. doi: 10.35290/rcui.v8n1.2021.400

Buntins, K., Kerres, M., & Heinemann, A. (2021). A scoping review of research instruments for measuring student engagement: In need for convergence. International Journal of Educational Research Open, 2, 100099. doi: 10.1016/j.ijedro.2021.100099

Castellanos, Y. A., & Rios-González, C. M. (2017). The importance of scientific research in higher education. Medicina Universitaria, 19(74), 19-20. doi: 10.1016/j.rmu.2016.11.002

Castro-Rodríguez, Y. (2021). Systematic review of the instruments for measuring research skills in higher medical education. Revista Habanera de Ciencias Médicas, 20(2), 1-14. https://www.medigraphic.com/cgi-bin/new/resumenI.cgi?IDARTICULO=108609

Castro-Rodríguez, Y. A. (2023). Marco de referencia de las competencias investigativas para la Educación Médica. Revista Cubana de Información en Ciencias de la Salud, 34(0). https://acimed.sld.cu/index.php/acimed/article/view/2190

Cater, M., Ferstel, S. D., & O’Neil, C. E. (2016). Psychometric Properties of the Inventory of Student Experiences in Undergraduate Research. The Journal of General Education, 65(3-4), 283-302. doi: 10.5325/jgeneeduc.65.3-4.0283

Charumbira, M. Y., Berner, K., & Louw, Q. A. (2021). Research competencies for undergraduate rehabilitation students: A scoping review. African Journal of Health Professions Education, 13(1), 52-58. doi: 10.7196/AJHPE.2021.v13i1.1229

Chen, Q., Huang, C., Castro, A. R., & Tang, S. (2021). Instruments for measuring nursing research competence: A protocol for a scoping review. BMJ Open, 11(2), e042325. doi: 10.1136/bmjopen-2020-042325

Cobos, F., Peñaherrera, M., & Ortiz, A. M. (2016). Validation of a questionnaire on research-based learning with engineering students. Journal of Technology and Science Education, 6(3), 219-233. doi: 10.3926/jotse.227

Cota, L. V., & Beltran-Sanchez, J. A. (2021). Propiedades métricas de cuatro subescalas para medir la competencia investigativa de docentes universitarios mexicanos. Innovación Educativa, 21(85), 1. https://dialnet.unirioja.es/servlet/articulo?codigo=8868985

da Silva, F. C., Gonçalves, E., Arancibia, B. A. V., Bento, G. G., Castro, T. L. da S., Hernandez, S. S. S., & da Silva, R. (2015). Estimators of internal consistency in health research: The use of the alpha coefficient. Revista Peruana de Medicina Experimental y Salud Pública, 32(1), 129-138. http://www.scielo.org.pe/pdf/rins/v32n1/a19v32n1.pdf

da Silva, R. C., Ribeiro, M. D., Pereira, W., & Mollo, W. R. (2023). Mentoring in research: Development of competencies for health professionals. BMC Nursing, 22(1). doi: 10.1186/s12912-023-01411-9

Duru, P., & Örsal, Ö. (2021). Development of the Scientific Research Competency Scale for nurses. Journal of Research in Nursing, 26(7), 684-700. doi: 10.1177/17449871211020061

Echevarría-Guanilo, M. E., Gonçalves, N., & Romanoski, P. J. (2019). Psychometric Properties of Measurement Instruments: Conceptual Basis and Evaluation Methods - Part II. Texto & Contexto - Enfermagem, 28. https://www.redalyc.org/journal/714/71465278102/html/

Escobedo, M. T., Hernández, J. A., Estebané, V., & Martínez, G. (2016). Modelos de ecuaciones estructurales: Características, fases, construcción, aplicación y resultados. Ciencia & trabajo, 18(55), 16-22. doi: 10.4067/S0718-24492016000100004

García-González, J. R., & Sánchez-Sánchez, P. A. (2020). Diseño teórico de la investigación: Instrucciones metodológicas para el desarrollo de propuestas y proyectos de investigación científica. Información tecnológica, 31(6), 159-170. doi: 10.4067/S0718-07642020000600159

García-Peñalvo, F. J. (2022). Desarrollo de estados de la cuestión robustos: Revisiones Sistemáticas de Literatura. Education in the Knowledge Society (EKS), 23, e28600-e28600. doi: 10.14201/eks.28600

Gess, C., Geiger, C., & Ziegler, M. (2019). Social-Scientific Research Competency. European Journal of Psychological Assessment, 35(5), 737-750. doi: 10.1027/1015-5759/a000451

Gros, S., Canet-Vélez, O., Contreras-Higuera, W., Garcia-Expósito, J., Torralbas-Ortega, J., & Roca, J. (2022). Translation, Adaptation, and Psychometric Validation of the Spanish Version of the Attitudes towards Research and Development within Nursing Questionnaire. International Journal of Environmental Research and Public Health, 19(8), 4623. doi: 10.3390/ijerph19084623

Groß, J., Wolf, R., Schladitz, S., & Wirtz, M. (2017). Assessment of educational research literacy in higher education: Construct validation of the factorial structure of an assessment instrument comparing different treatments of omitted responses. Journal for Educational Research Online, 9(2), 37-68. http://nbn-resolving.de/urn:nbn:de:0111-pedocs-148962

Guerrero-Narbajo, Y., Rosario-Quiroz, F., & Santos-Morocho, J. (2023). Diseño y validación de la Escala de Autoevaluación de Habilidades para la Investigación Formativa (EAHIF) en estudiantes universitarios. Revista Cubana de Educación Superior, 42(2), 139-155. https://revistas.uh.cu/rces/article/view/6920

Hernández, C. A., Gamboa, A. A., & Avendaño, W. R. (2021). Validación de una escala para evaluar competencias investigativas en docente de básica y media. Revista Boletín Redipe, 10(6), 393-406. doi: 10.36260/rbr.v10i6.1335

Hernández, R. M., Saavedra-López, M. A., Calle-Ramirez, X. M., & Rodríguez-Fuentes, A. (2021). Index of Undergraduate Students’ Attitude towards Scientific Research: A Study in Peru and Spain. Jurnal Pendidikan IPA Indonesia, 10(3), 416-427. doi: 10.15294/jpii.v10i3.30480

Howard, A., & Michael, P. G. (2019). Psychometric Properties and Factor Structure of the Attitudes Toward Research Scale in a Graduate Student Sample. Psychology Learning & Teaching, 18(3), 259-274. doi: 10.1177/1475725719842695

Ianni, P. A., Samuels, E. M., Eakin, B. L., Perorazio, T. E., & Ellingrod, V. L. (2021). Assessments of Research Competencies for Clinical Investigators: A Systematic Review. Evaluation & the Health Professions, 44(3), 268-278. doi: 10.1177/0163278719896392

Ipanaqué-Zapata, M., Figueroa-Quiñones, J., Bazalar-Palacios, J., Arhuis-Inca, W., Quiñones-Negrete, M., & Villarreal-Zegarra, D. (2023). Research skills for university students’ thesis in E-learning: Scale development and validation in Peru. Heliyon, 9(3), e13770. doi: 10.1016/j.heliyon.2023.e13770

Jager, J., Putnick, D. L., & Bornstein, M. H. (2017). More than Just Convenient: The Scientific Merits of Homogeneous Convenience Samples. Monographs of the Society for Research in Child Development, 82(2), 13-30. doi: 10.1111/mono.12296

Jeréz, I. E. H., Laza, O. U., Martorell, L. de la C. M., & Hernández, L. L. (2022). Habilidades investigativas de los licenciados en enfermería en el Instituto de Hematología e Inmunología. Revista Cubana de Hematología, Inmunología y Hemoterapia, 38(4). https://revhematologia.sld.cu/index.php/hih/article/view/1706

Kaur, R., Hakim, J., Jeremy, R., Coorey, G., Kalman, E., Jenkin, R., Bowen, D. G., & Hart, J. (2023). Students’ perceived research skills development and satisfaction after completion of a mandatory research project: Results from five cohorts of the Sydney medical program. BMC Medical Education, 23(1). doi: 10.1186/s12909-023-04475-y

Madadizadeh, F., & Bahariniya, S. (2023). Tutorial on how to calculating content validity of scales in medical research. Perioperative Care and Operating Room Management, 31, 100315. doi: 10.1016/j.pcorm.2023.100315

Mallidou, A. A., Borycki, E., Frisch, N., & Young, L. (2018). Research Competencies Assessment Instrument for Nurses: Preliminary Psychometric Properties. Journal of Nursing Measurement, 26(3), E159-E182. doi: 10.1891/1061-3749.26.3.E159

Maric, D., Fore, G. A., Nyarko, S. C., & Varma-Nelson, P. (2023). Measurement in STEM education research: A systematic literature review of trends in the psychometric evidence of scales. International Journal of STEM Education, 10(1), 39. doi: 10.1186/s40594-023-00430-x

McKechnie, D., & Fisher, M. J. (2022). Considerations when examining the psychometric properties of measurement instruments used in health. AJAN - The Australian Journal of Advanced Nursing, 39(2). doi: 10.37464/2020.392.481

Merino-Soto, C., Fernández-Arata, M., Fuentes-Balderrama, J., Chans, G. M., & Toledano-Toledano, F. (2022). Research Perceived Competency Scale: A New Psychometric Adaptation for University Students’ Research Learning. Sustainability, 14(19), 12036. doi: 10.3390/su141912036

Mokkink, L. B., Terwee, C. B., Knol, D. L., Stratford, P. W., Alonso, J., Patrick, D. L., Bouter, L. M., & de Vet, H. C. (2010). The COSMIN checklist for evaluating the methodological quality of studies on measurement properties: A clarification of its content. BMC Medical Research Methodology, 10(1), 22. doi: 10.1186/1471-2288-10-22

Newman, I., Lim, J., & Pineda, F. (2013). Content Validity Using a Mixed Methods Approach: Its Application and Development Through the Use of a Table of Specifications Methodology. Journal of Mixed Methods Research, 7(3), 243-260. doi: 10.1177/1558689813476922

Nolazco-Labajos, F. A., Guerrero, M. A., Carhuancho-Mendoza, I. M., & Saravia, G. del P. (2022). Competencia investigativa estudiantil durante la pandemia. Revista de Ciencias Sociales, XXVIII(6), 228-243. https://produccioncientificaluz.org/index.php/rcs/article/view/38834

Oviedo, H. C., & Campo-Arias, A. (2005). Aproximación al uso del coeficiente alfa de Cronbach. Revista Colombiana de Psiquiatría, 34(4), 572-580. http://www.scielo.org.co/scielo.php?script=sci_abstract&pid=S0034-74502005000400009&lng=en&nrm=iso&tlng=es

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Alonso-Fernández, S. (2021). Declaración PRISMA 2020: Una guía actualizada para la publicación de revisiones sistemáticas. Revista Española de Cardiología, 74(9), 790-799. doi: 10.1016/j.recesp.2021.06.016

Qiu, C., Feng, X., Reinhardt, J. D., & Li, J. (2019). Development and psychometric testing of the Research Competency Scale for Nursing Students: An instrument design study. Nurse Education Today, 79, 198-203. doi: 10.1016/j.nedt.2019.05.039

Ranganathan, P., & Aggarwal, R. (2020). Study designs: Part 7 - Systematic reviews. Perspectives in Clinical Research, 11(2), 97-100. doi: 10.4103/picr.PICR_84_20

Roberts, L. D., & Povee, K. (2014). A brief measure of attitudes toward mixed methods research in psychology. Frontiers in Psychology, 5. doi: 10.3389/fpsyg.2014.01312

Rockinson-Szapkiw, A. (2018). The development and validation of the scholar-practitioner research development scale for students enrolled in professional doctoral programs. Journal of Applied Research in Higher Education, 10(4), 478-492. doi: 10.1108/JARHE-01-2018-0011

Rodríguez, A., Caurcel, M. J., Gallardo, C. del P., & García, A. (2023). Reconocimiento y actitud hacia la investigación educativa en la Universidad. Revista Interuniversitaria de Formación del Profesorado. Continuación de la antigua Revista de Escuelas Normales, 98(37.1). doi: 10.47553/rifop.v98i37.1.97824

Smith, S. E., Newsome, A. S., Hawkins, W. A., Bland, C. M., & Branan, T. N. (2020). Teaching research skills to student pharmacists: A multi-campus, multi-semester applied critical care research elective. Currents in Pharmacy Teaching and Learning, 12(6), 735-740. doi: 10.1016/j.cptl.2020.01.020

Swank, J. M., & Lambie, G. W. (2016). Development of the Research Competencies Scale. Measurement and Evaluation in Counseling and Development, 49(2), 91-108. doi: 10.1177/0748175615625749

Toro, R., Peña-Sarmiento, M., Avendaño-Prieto, B. L., Mejía-Vélez, S., & Bernal-Torres, A. (2022). Análisis Empírico del Coeficiente Alfa de Cronbach según Opciones de Respuesta, Muestra y Observaciones Atípicas. Revista Iberoamericana de Diagnóstico y Evaluación - e Avaliação Psicológica, 63(2), 17. doi: 10.21865/RIDEP63.2.02

Torres, S. A., & Manchego, J. L. (2023). Research competencies in Ibero-American university students: A systematic review. LATAM Revista Latinoamericana de Ciencias Sociales y Humanidades, 4(1), 2784-2802. doi: 10.56712/latam.v4i1.454

Tuononen, T., & Parpala, A. (2021). The role of academic competences and learning processes in predicting Bachelor’s and Master’s thesis grades. Studies in Educational Evaluation, 70, 101001. doi: 10.1016/j.stueduc.2021.101001

Valderrama, M. W., Pérez, C. L., Llaque, G., & Matute, J. C. (2022). Investigative skills in university students. A systematic review. LACCEI International Multi-Conference for Engineering, Education, and Technology, 1-9. doi: 10.18687/LACCEI2022.1.1.127

Ventura-León, J., & Peña-Calero, B. N. (2020). El mundo no debería girar alrededor del alfa de Cronbach ≥ ,70. Adicciones, 33(4), 369-372. doi: 10.20882/adicciones.1576

Vieno, K., Rogers, K. A., & Campbell, N. (2022). Broadening the Definition of ‘Research Skills’ to Enhance Students’ Competence across Undergraduate and Master’s Programs. Education Sciences, 12(10), 642. doi: 10.3390/educsci12100642

Xiao, L., & Hau, K. T. (2022). Performance of Coefficient Alpha and Its Alternatives: Effects of Different Types of Non-Normality. Educational and Psychological Measurement, 83(1), 5-27. doi: 10.1177/00131644221088240

Zakariya, Y. F. (2022). Cronbach’s alpha in mathematics education research: Its appropriateness, overuse, and alternatives in estimating scale reliability. Frontiers in Psychology, 13. doi: 10.3389/fpsyg.2022.1074430

Zangaro, G. A. (2019). Importance of Reporting Psychometric Properties of Instruments Used in Nursing Research. Western Journal of Nursing Research, 41(11), 1548-1550. doi: 10.1177/0193945919866827

Received: September 18, 2023; Revised: October 11, 2023; Accepted: December 18, 2023; Published: December 31, 2023
